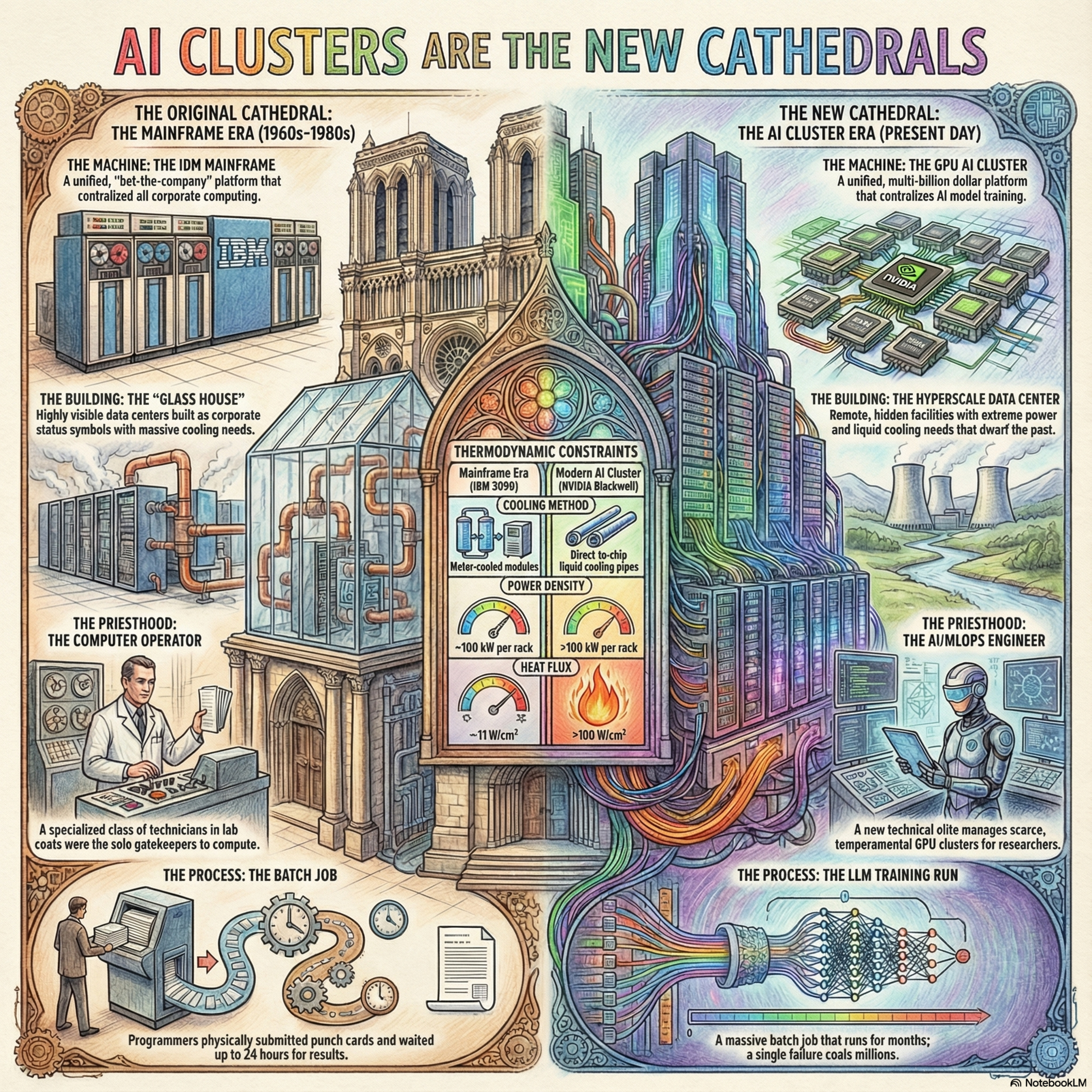

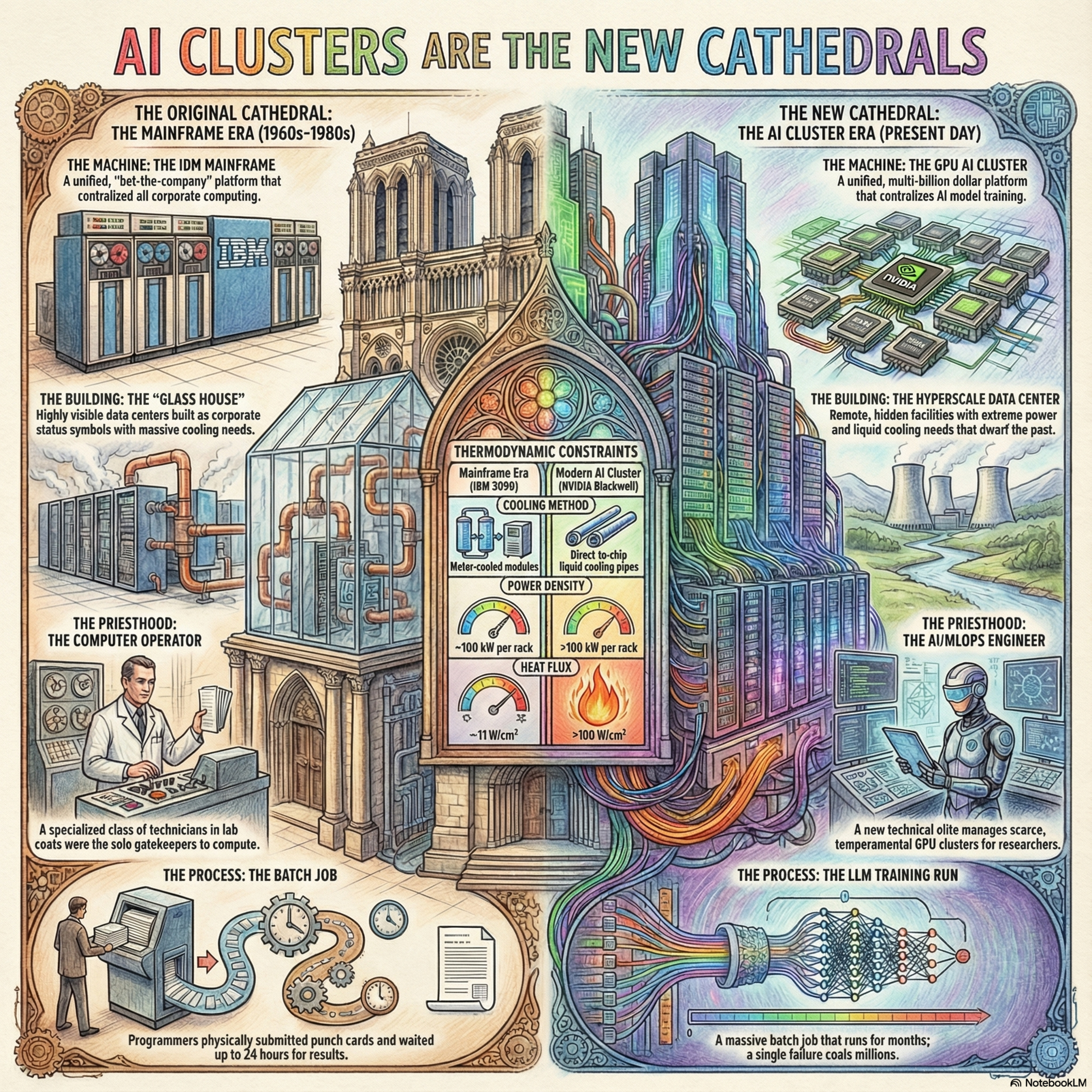

Rise of the Machine: AI clusters are the new Cathedrals.

Episode · Dec 8, 2025 · 40m

Open Source Research Mosaic

The history of enterprise computing shows a consistent oscillation between centralization and decentralization, scarcity and abundance. The foundational era saw the dominance of the mainframe, described as the "Cathedral" or the "Glass House," which demanded massive upfront capital expenditure. These systems were large physical installations that required stringent environmental control, a heat-management engineering challenge that has forcefully returned in the modern AI era. Access to these scarce resources was controlled by a specialized group of operators, forming a "Priesthood". Programmers submitted their work in the form of punch cards, leading to a culture of high-latency batch processing where turnaround times could be a day or more.

The minicomputer and workstation eras (led by companies like Digital Equipment Corporation and Sun Microsystems) reversed this trend, democratizing access and placing compute power directly on the user's desk. However, the current artificial intelligence build-out represents a dramatic structural regression to the centralized, capital-intensive model of the 1960s. The economics of creating large language models necessitate massive GPU clusters, the new Mainframes, which reside in hidden hyperscale data centers, effectively rebuilding the centralized "Glass House". Training these models is a massive, resource-intensive batch job that runs for months, mirroring the high-latency feedback loop of the mainframe era. The scarcity of compute cycles forces reliance on specialized MLOps engineers, the modern equivalent of the computer Priesthood.

Nvidia's unprecedented strategic victory was achieved by recognizing that the future of computing would be accelerated, not sequential. While competitors focused on the traditional processor wars, Nvidia invented the software platform CUDA, the essential bridge that unlocked the immense parallel processing power of the GPU for general use beyond graphics. This sustained investment in a full software ecosystem created a powerful, proprietary "moat" that locks developers into the platform.

Historically, technological booms like this one have been accompanied by familiar patterns of public anxiety and financial risk. The widespread fears today concerning artificial general intelligence (AGI) and job loss are a near-perfect repetition of the "Automation Anxiety" that gripped the public in the 1960s, which ultimately led to a transformation of labor rather than its cessation. Financially, the concentration of investment in today's leading technology companies mirrors the "Nifty Fifty" stock bubble of the 1970s, a period that shows that even dominant, vital companies can suffer a disastrous collapse in value when purchased amid market hysteria. Furthermore, the specialized AI chip architectures being developed today face a historical threat: in the 1980s, technically superior specialized Lisp machines were eventually defeated by the volume economics of general-purpose chips, whose speed quickly caught up.

Nvidia is now leveraging its full-stack platform to conquer new domains, applying its dominance in training to the physical world of robotics and autonomous vehicles, with the ambitious Omniverse platform as the unifying simulation environment. Despite its current dominance, Nvidia faces formidable challenges from customers such as cloud providers, who are designing their own custom AI chips to reduce dependency, and from competitors championing open-source software alternatives to break the proprietary CUDA ecosystem.

The modern AI boom is thus the reconstruction of the Cathedral: larger and faster than before, but fundamentally a return to the centralized model of the past.

#AICathedral #AcceleratedComputing #Nvidia #CUDA #TechHistory #AIBoom #AutomationAnxiety #NiftyFiftyBubble #CentralizationReversal #ProprietaryPlatform #dotcomBust
